On April 18 fellow blogger Michael Hutak published a note in the OLPC.Oceania blog pointing to the Australian Council for Educational Research (ACER) report on a 15-month-old deployment in the Solomon Islands.

New Solomons study puts hard evidence of OLPC's positive impact

An independent evaluation commissioned by the Solomon Islands Government (SIG) of the OLPC pilot projects in the remote Western Province has boosted calls to expand the program in the country and across the Pacific.

The full article is here, yet I beg to differ that this is hard evidence.

"Hard evidence" means reproducible, objective data

What these guys have is soft and cuddly evidence, and presenting this kind of data as real research is core to OLPC's problem of credibility. AFAIK there is only one study ever done on OLPC matters that used objective data, and so far it has been buried for almost a year (I am preparing a post on that).

All other "evaluations" I've seen, except that one, used surveys. It is very, very hard to get any sort of validity out of surveys. I should know: I do contract work in that field.

It is very easy to have surveys say something nice about the client, "independent" survey or not, and it could be disputed whether the ACER is actually independent of the goings-on of OLPC in the Pacific. The reason I can sleep at night is that so far my clients do care for real data, since their businesses hang on it.

They'd rather hear the bad and so-so news, so they can change things to be better for their clients. Good news is gravy to them, not what they really worry about when things get difficult, like right now things are in the US economy. Or like how things are for OLPC...

Why can't we see things that way? Why do we *pretend* all is rosy, when by listening, sharing and fixing a few problems we actually could have a chance to do something that *is* good, not just looks somewhat good to those who already have their pink sunglasses on?

Since our business model so far mostly hangs on goodwill and old friends, I guess we are too used to thinking we can get away with "feel good" reports that don't tell facts, they merely share other "feel good" stories. Not that there aren't good things to share, yes, we have success stories, we can and should communicate and make them known, but to get credibility we mainly do need "hard data", of which we have very little published.

Hard data is what all funding agencies ask for, and its dire absence is the simplest excuse they need to send us away empty-handed.

Getting Hard Data

Hard data can come from Journal data mining. Grades. Testing results. Teacher logs. Server logs. Maintenance logs.

I do not blame the big funding agencies for staying away from supporting us. They have been gullible and burned too many times already to just take feel-good surveys as evidence of success. I know, good, valid research often takes time, sometimes gets expensive, certainly requires skill, and above all requires the desire to tell the truth as it is.

Yet some data is already available, like maintenance logs. AFAIK, those exist (Wad said so) but their detailed content has never been made public, though it is often insisted XO laptops require very little maintenance. Finding out which websites are accessed is a simple matter of dumping the server logs; these too, AFAIK, have never been published, except for one study in Argentina. Neither needs much time or money, and the skill is available if the raw data were made accessible. What is missing, then?

All in all, this Solomon Islands thing looks like another infomercial, sorry, nice try. Please, can we have some objective data next time?

Yama,

I have a hard time understanding what you are arguing here. That the actual study and report is wrong and/or misleading, or that OLPC.Oceania's interpretation of this study is wrong?

I could go along with the second but I would like some more _data_ from you (since you are in the field) for the first.

And if there is nothing wrong with the study what's your take on the study's conclusions and recommendations?

Well said, Yama.

I'm glad to see the rare case of an OLPC fan who is also interested in finding out the facts. More people like you are needed.

Mavrothal, you are a good man: though you disagree with what I say, and/or are confused by it, you kindly give me the benefit of the doubt. I hope to be better at that myself.

My peeve here is the method.

It has been said in science that the only thing worse than a lie is a waste of time. IMNSHO, opinion-based research, as is the case for this report, only tells us with some certainty what the interviewees think we want to hear, and thus, more than advancing knowledge, is mostly a waste of time.

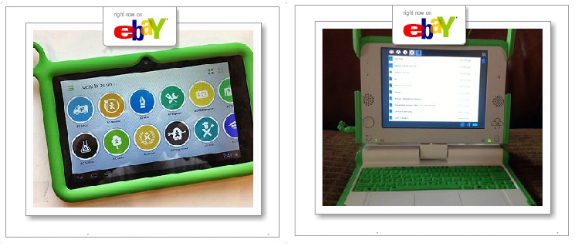

My take is that, because of the method, this study's conclusions are mostly irrelevant in terms of figuring out what the impact of OLPC is, beyond a feel-good shared experience. Thus it doesn't help to advance the efforts we are making to become credible. I mean, if it were a study of perceptions, fine, not that there is really anything new, though it's always nice to hear people love the green things. But, as a study of impact, and the assumption that asking people their perception will give us anything approaching valid measures of actual impact in learning, wrong method.

Thus, my recommendation, and thank you for asking, is that people doing research do focus on what can be measured objectively if at all possible. While it is an endless debate on ways to actually measure learning, we can somewhat be grandfathered in the assumption that classroom and curricular use of a given resource means that that resource is helping in the learning process. Thus, just an example, let's measure how much that given resource is used, doing what. Datamining the servers where Journals are backed up should do the trick for finding out a lot on what XOs are used for and when, or even, asking the kids to fill out a form with what they see in their Journal. But asking the kids, or the teachers, or the parents how much time they have spent doing what, introduces so many layers of uncertainty that any practical level of validity or credibility is totally lost.

Of course, some people might feel that such data is valid. Well, I don't, and it seems I am not the only one, or *hard* evidence would be so overwhelming that we could go to any funder or government and *tell* them to fund us or else prove to the world they're idiots. As things are, anyone can dismiss this kind of "evidence" and the hubris bearer.

That hurts the project, because we DO NEED evidence that is irrefutable, reproducible, and the only way to get that kind is to go for objective, hard data. I agree with you that the second alternative you present is a moot point and rather evident for anyone who understands what hard data means.

BTW, not "in the field" at the moment, hope to make it back there within a couple months.

@"Me" You got it, buddy. Wish it weren't so hard.

Yama,

I'm not disagreeing that we need hard data. We DO (and said it many times in the past).

But I went through the "study" and my understanding is that the intention was to address specific questions about the pilot, "ordered" by the department of education. Which it did in the proper manner, eg querying interested/involved parties. (Besides, it would be totally ridiculous to draw any serious conclusions from samples of 20-30 individuals. You can hardly get standard errors :-)

Now why OLPC.Oceania decided to present it as "hard evidence" I can imagine... What I do not understand is why _you_ consider that the authors presented this as "hard evidence". And that's what I actually asked!

Care to address it?

cle-ver. I've never been caught in a syllogism net before. Interesting experience. OK; it works this way. If someone comes up with a rose, and someone else takes a picture, and shows it to me, saying "this here is a picture of a tree", it still refers to a rose, since we know that a rose is a rose, even by any other name. Now, in fact and strict truth and logic, it *is not* a rose, it *is* a picture (whether it is a picture of a rose or of a tree becomes irrelevant, because the actual *object* is the picture). Pardon me, I won't read the study again to find the precise instructions given, sorry.

If I follow what you say, the problem is with whomever commissioned the study, then, not with those who followed instructions. But worse, the problem is with the general feeling in the OLPC universe that it is OK to think of something like this as research. A weltschmerz (ha! I spelled that right :-)).

While you may not get standard error out of a small sample, that doesn't mean you cannot get objective data, which becomes a starting point for real data analysis. We have to start somewhere.

Thank you, I'll say it again, interesting experience, for a while I was worried.

BTW, there is one factual error in my note, this was nagging me, let me do a correction here. I said ""feel good" reports that don't tell facts". That is unfair and inaccurate. "feel good" reports *can* tell facts, like for example positive perception, anecdotes of effort and good work, and I have contributed with much joy to some such documents clearly labelled as happy stories of XO use. The meaning I was trying to get through is that if that's all we can call "research", it makes us lose credibility, because the full truth, which is what research should be made of, may and likely does also include vandalism or simply no meaningful use for learning, among others.

I do have some idea about research. And I do know that unless you look at the actual raw data and understand it, you can hardly evaluate them from first, second or third hand reports.

I also know that social studies can hardly produce evidence in the strict scientific sense. For one because the variables are hard to control or even to know, two because of the time frame involved and three because it is almost impossible to repeat them.

Finally I know that hard published research quite often is plain wrong (not because of malice or neglect but because things are always more complex than we think). The easy example is Medicine and how "established" treatments/medications are changed or abandoned after a few short years.

The bottom line is that we'll probably never know _for sure_ if ICT in education is good or bad. We may get a good idea if a specific model works to some extent or if one is better than another, but the results will always be debatable.

Particularly in education what your best hope is I believe, is to provide some evidence that what you are doing is not obviously wrong(!), and some indication that may actually be beneficial. The fact that educational systems within one country are constantly changing and the differences from country to country (even when at similar level) are so big, is a testament to that.

So look again at the "study", which is actually a report, and tell me what you think (well, that's a figure of speech :-)

Hey guys,

I'm a bit new to OLPC but I'm still extremely intrigued by this article.

Have there been any studies with "hard" or quantitative evidence on OLPC?

In addition Yama, how would you recommend getting quantitative evidence from a pilot project (I'm referring to my own project, not the oceania one) where the observing period is only 4 months from the program initiation date? I do agree that there should be some objectifiable data that can demonstrate correlation, but seeing as how many measures of education and learning are relative (correct me if I'm wrong here) - is there no way to avoid a qualitative study?

Thanks guys!

M.

@MephistoM and others

"In addition Yama, how would you recommend getting quantitative evidence from a pilot project (I'm referring to my own project, not the oceania one) where the observing period is only 4 months from the program initation date?"

Maybe it is helpful to look at it as a student assignment, say, as Bachelor thesis of some student. What would we accept from that student after, say 2 months of work?

First, attitude questionnaires and interviews. Obviously, it must be established how the users (pupils, teachers, parents) are feeling about XO use. That will guide the interpretation of the other results.

Second, time spent with the XO, both educational and private.

This can be done by asking pupils, teachers, and parents to estimate the hands-on time. That is tricky, because you will need to determine how the actual times relate to the reported times. Best to inspect the Journals and server logs. But there might be technical (ie, incompetence) and privacy objections to accessing the data of the children.

An alternative would be to observe a sample of (one?) children over some time and extrapolate from her reported and real hands-on time.

Another way is to do surprise visits and record what the children are actually doing at that moment. Then extrapolate that. Say, if every time you look into a classroom x% of the children are working on the XO, you can conclude that children spend around x% of their time working on the XO (on average). The same with house calls.
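The extrapolation from surprise visits can be sketched in a few lines. This is only an illustration under stated assumptions: the function name `usage_estimate` and the counts are made up, and the Wilson score interval is one common choice for putting an uncertainty range around an observed proportion, not something prescribed above.

```python
from math import sqrt

def usage_estimate(in_use: int, observed: int, z: float = 1.96):
    """Estimate the share of time spent on the XO from spot checks.

    in_use   -- number of child-observations where the XO was in use
    observed -- total number of child-observations across all visits
    z        -- normal quantile (1.96 gives a roughly 95% interval)

    Returns (point estimate, lower bound, upper bound) using the
    Wilson score interval for a binomial proportion.
    """
    if observed <= 0:
        raise ValueError("need at least one observation")
    p = in_use / observed
    denom = 1 + z * z / observed
    centre = (p + z * z / (2 * observed)) / denom
    half = z * sqrt(p * (1 - p) / observed + z * z / (4 * observed ** 2)) / denom
    return p, centre - half, centre + half

# Hypothetical numbers: 100 child-observations, 30 with the XO in use.
share, low, high = usage_estimate(30, 100)
print(f"estimated share of time on the XO: {share:.0%} ({low:.0%} to {high:.0%})")
```

The interval makes it visible how rough the extrapolation is with few visits; more observations narrow it.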

Third, pick a problem area and look, in depth, how the XO changed it, or not.

There is often (most of the time?) some poster problem that incited criticism of the educational system. Eg, truancy, concentration, sub standard reading or math skills etc. Look at how this problem has been affected by the program.

You cannot expect definite results in 4 months. But it is well known that skills correlate very strongly to hands-on time practicing. If children spend more time reading, they will read better. If they spend more time writing, they will write better, etc.

Try to get numbers about how much the pupils practice the problem skill. That is much more reliable than asking "do they spend time on the XO?".

You can ask teachers and children, eg, how many stories they have read or how many "pages" they have written. You can ask about home assignments. And ask to name examples. And you can compare this to what other children experience.

And more can be done with statistics than just lumping unstructured data from a thousand children. You really CAN get significant results from 15 test subjects. It all depends on what you ask and what you measure. Before/after is difficult in children, they change all the time. But it could be done over a short time period, eg, 4 months. You can also match children of two groups in pairs (gender, age, background) and do a pairwise test.

For example, if 13 test pupils read more than their matched control (or before) and 2 read less, a simple sign test is significant at alpha = 0.01 (significance level). And that would be the simplest of tests. With more detailed numbers, eg, reading level or completed homework assignments, you can do much more.
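The 13-versus-2 example can be checked with an exact two-sided sign test, which only assumes that, if the XO made no difference, each pair is equally likely to go either way. A minimal sketch (the function name `sign_test_p` is just for illustration):

```python
from math import comb

def sign_test_p(wins: int, losses: int) -> float:
    """Exact two-sided sign test p-value; ties are assumed to be dropped.

    Under the null hypothesis each pair is a fair coin flip, so the
    number of wins follows a Binomial(n, 0.5) distribution.
    """
    n = wins + losses
    k = max(wins, losses)
    # Probability of a split at least this lopsided in one direction.
    tail = sum(comb(n, i) for i in range(k, n + 1)) / 2 ** n
    # Double it for the two-sided test, capped at 1.
    return min(1.0, 2 * tail)

p_value = sign_test_p(13, 2)
print(f"two-sided p = {p_value:.4f}")  # below 0.01 for 13 vs 2
```

The test ignores how much more each pupil read; it only counts directions, which is why it is the simplest option.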

In short, nothing a bachelor student cannot accomplish in 2 months with 20 children.

Yama, "Weltschmerz" is a noun and should be capitalized in German ;-)

Winter

Welcome, MephistoM

Very little hard data has come up and AFAIK even less specifically as a study.

Thus you may be among the first!

As Mavrothal points out, the fewer datapoints you have, the less valid your interpretation will be. A short span gives fewer opportunities to enter a "routine" that might more accurately indicate real life use beyond the honeymoon newness of the XO, but IMHO I would think that anything after a couple months starts to be OK. Also, Winter points out that some valid data could be gathered even from one single instance, though as the interaction researcher-child increases, it can be suspected that it is that interaction that actually is making the good results possible - this argument has been used by some to put into question some of S.Papert's conclusions.

I have a difference of opinion with some suggestions Winter offers, which is that I believe that "asking them" is precisely the method to avoid, preferring some more objective sources. Just looking through the window would be heaps better in terms of believability of the data. "The researcher saw the computers in use" is more real than "the researcher was told computers were used". And anyway we already have too many "ask" reports, we need more of the objective ones.

Anyway, as to what you can actually do:

If your people can access the internet, figure out how to get the access logs. The connection goes through a server somewhere, which can record every single page request. In my case that is an option in my Netgear access point. Datamining that stuff can be quite enlightening.
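As a rough idea of what datamining such a log could look like, here is a minimal sketch. The log layout (date, time, client address, URL, separated by spaces) and the sample lines are assumptions made up for illustration; a real access point or proxy will have its own format, and the field positions would need adjusting.

```python
from collections import Counter
from urllib.parse import urlsplit

# Made-up sample in an ASSUMED format: date time client-ip url
SAMPLE_LOG = """\
2010-04-20 19:03:11 192.168.1.23 http://reading.example.org/stories/1
2010-04-20 19:05:40 192.168.1.23 http://reading.example.org/stories/2
2010-04-20 19:07:02 192.168.1.31 http://games.example.org/play
malformed line
"""

def count_hosts(lines):
    """Count page requests per web host, skipping lines that do not parse."""
    hosts = Counter()
    for line in lines:
        fields = line.split()
        if len(fields) < 4:
            continue  # not in the assumed four-field layout
        host = urlsplit(fields[3]).hostname
        if host:
            hosts[host] += 1
    return hosts

host_counts = count_hosts(SAMPLE_LOG.splitlines())
for host, hits in host_counts.most_common():
    print(f"{hits:5d}  {host}")
```

Counting by host rather than by client address keeps the summary about what is used, not about who used it.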

If your XOs make a backup of everything to a server, that could also be looked at. I do not know how exactly, but then the data is there, so there must be a way, I hope, maybe someone can give us pointers.

Maybe you have no internet and no server. No prob! Make yourself a little form with two columns, Activity, and HowLongAgo. Ask kids to go to the Journal, and fill out exactly what they see. Collating that from many kids and over several weeks (a random day of the week would be good) will give you a snapshot of actual use, already better than anything we know of for certain. Since the form doesn't have a name, it can be quite private.
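Collating the paper forms is a simple tally once they are typed in. A minimal sketch, assuming each form is entered as a list of (Activity, HowLongAgo) rows; the sample rows below are invented for illustration, not real data:

```python
from collections import Counter

# Each inner list is one anonymous form, copied from a child's Journal.
# These rows are hypothetical examples.
forms = [
    [("Write", "2 hours ago"), ("Browse", "1 day ago")],
    [("Memorize", "3 hours ago"), ("write ", "5 hours ago")],
    [("Write", "2 days ago")],
]

def tally_activities(forms):
    """Count how often each activity appears across all forms."""
    counts = Counter()
    for form in forms:
        for activity, _how_long_ago in form:
            # Normalise spacing and capitalisation from hand-typed entries.
            counts[activity.strip().title()] += 1
    return counts

activity_counts = tally_activities(forms)
total = sum(activity_counts.values())
for activity, n in activity_counts.most_common():
    print(f"{activity:10s} {n:3d}  ({n / total:.0%} of entries)")
```

The HowLongAgo column is kept with each row so that a later pass could separate recent use from older entries.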

These are just a couple ideas. With adequate motivation I am sure we could come up with much better.

One word about concerns for privacy: Overstated.

Any decent teacher can be trusted to handle this right, and if you do not have decent teachers, that's one excellent reason to have someone look at the internet logs to see what kind of indecencies they are up to, some of them could hurt you and OLPC real bad.

Thanks a lot guys - this really helped a lot. I'll definitely take your recommendations into consideration, and Yama, I will try to look into the sites visited and time of use as possible measures of activity/use of the XO. This will give me both a quantitative and qualitative approach, and when my research is done, I'll make sure to post it in the news forum.

Just a final question: I know 4 months might not be enough to check for a correlation, especially since it is the first time the kids are using it and their lack of familiarity might be a confounder if there is a correlation. What kind of relationships would you recommend? For example, one that I thought of (but I need research to back up as well as creating an appropriate test) is that literacy will be improved by spending time on the XO. Would I then be able to maybe use a dependent t-test (before and after) for the quantitative part and record "how they feel/perceptions" as the qualitative part?
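For reference, a dependent (paired) t-test works on the per-child differences between the two measurements. A minimal sketch with made-up literacy scores; the numbers and the function name `paired_t` are purely illustrative:

```python
from math import sqrt
from statistics import mean, stdev

def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for a paired t-test."""
    if len(before) != len(after):
        raise ValueError("each child needs both a before and an after score")
    diffs = [a - b for b, a in zip(before, after)]
    n = len(diffs)
    if n < 2:
        raise ValueError("need at least two pairs")
    # t = mean difference divided by its standard error.
    t = mean(diffs) / (stdev(diffs) / sqrt(n))
    return t, n - 1

# Hypothetical scores for five children, before and after the pilot.
before = [10, 12, 9, 14, 11]
after = [12, 13, 11, 15, 14]
t_stat, dof = paired_t(before, after)
print(f"t = {t_stat:.2f} with {dof} degrees of freedom")
```

The resulting t is then compared against a t table for the given degrees of freedom; with 4 degrees of freedom the two-sided 5% critical value is about 2.776. Note that a before/after design alone cannot separate the effect of the XO from four months of ordinary schooling, which is where a matched control group helps.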

*note: I'm sorry if I got any statistical knowledge wrong - still learning beginner's stats.

Thank you so much guys!

M

This evaluation was commissioned by the Solomons government with limited funds and restricted timing. It was intended as a formative evaluation to give some general indication that the project was "on track" and that it was producing impacts in line with the Ministry's objectives, which are given in a table at the back of the report. The three schools had not been equipped with servers, had power only in the evening, and had limited training for teachers - all because there were insufficient funds available. We did indeed suggest adding activities such as classroom observations, inspection of journals (which seems to be a vague science - pleased if you can tell me how to automate that) and some of the good constructive suggestions that the author of this blog does provide. However, time and funding did not allow us the luxury of that wider scope. The evaluation was indeed independent, and the Ministry now has grounds to make decisions on extending the trials with increased confidence. We can now look towards a second round of M&E with a "harder" more robust approach, which is what is planned. It would be good if the author of this blog who obviously feels he could do better could imagine the restrictions faced in the real world (especially places like the Solomons where we have huge logistical challenges and massive competing priorities for instance), and offer to help with substantive inputs into the design of the new evaluation framework instead of piling on the negativism which could do a lot of damage to the small progress we are making in our region.

After reading through the comments I need to add to my post above. I think that this is a very valuable discussion. I need to explain a bit more about the context. Firstly I should say that although I helped the Ministry to set up the three trials, I was not involved in any way in the evaluation. However, I do know that it was very difficult under the circumstances in which the trials evolved, to be able to acquire the baseline data on which a truly objective study could be undertaken. Firstly, the trials did not happen as a fully completed deployment all at once, but rather a much more piecemeal process; only a small group in the Ministry were working on this with little technical assistance and initially only limited appreciation of what the OLPC package offers as a whole of sector way of introducing ICT for education. Thus, curriculum were not involved, for instance. So, there were a lot of limitations imposed. We did have a chance to suggest collecting data from journals, and to acquire usage data with a more statistical nature but (ACER determined) the time limitations ruled that out. We have feedback from the Principal at the school concerning improved exam results. But without the baseline data that was only anecdotal. What we have at the end of the process, however, is greatly increased awareness and buy-in across the whole sector, which will allow us the luxury of having a robust baseline on which to evaluate results in the future. The report is really more of an internal one, and was never expected to demonstrate hard evidence of improvements in educational efficiency. However, now we have reached this stage, we can certainly move forward, and all the suggestions given in the comments above are very useful and welcomed, as is the chance to have this debate. 
So to summarise, I should not have been so reactive in my initial post, but people might appreciate that approaches to evaluation often have to be aligned with the state of maturity of the project, and that includes all kinds of issues within the education sector, such as awareness and inertia as well as funding and technical capacity. Thanks.

Hi All,

This debate seems to be becoming a bit polarised.

Yama, there is as much doubt about the value of gathering your "hard" data as there is about gathering the "soft" data.

For example, just what does it mean that 16 children spent 4 hours using the memorise activity or surfing such and such a site?

IMHO, it means little without discussing the experience and the learnings with the children, a process which is part of teaching I believe.

So it is important to get the teachers' view through surveys and interviews.

If one looks at the body of evidence about research into ICT in education, there are very diverse views about the way to conduct it and the value of the results.

In the end, the research must be conducted to help decision makers and in the case of the Solomons, the Ministry of Education developed a M&E framework before the project started.

I think it is a bit mean spirited of you to criticise them for doing this.

The report was "fit for purpose" and appropriate, considering the nature of the project.

Ian