As a relatively new organization, One Laptop Per Child awaits in-depth longitudinal assessments of the program from our partners. Existing literature, however, produced by partners and independent organizations, provides insight into the initial stages of various OLPC projects throughout the world.

OLPC Review of External OLPC Monitoring & Evaluation Reports

Evaluations of programs on the ground have been coordinated by a diverse range of organizations with the assistance of government actors and groups. Through these varied evaluation mechanisms, we have gained an understanding of how the XO laptops adapt to work for children in different environments; each evaluation report reflects the rich diversity of each community's experience.

The methodology, timing, and conduct of the evaluations have varied with each project's implementation model, reflecting an individualized evaluation approach that mirrors how the program was implemented in each country and region of deployment. As a result, the findings from existing evaluations on child usage, family impact, and teacher experience range from anecdotal to quantitative.

The findings are also largely positive: the impact on childhood education, with laptops used both at home and in the classroom, has been judged successful overall. We eagerly await more data on specific improvements by subject, region, timing, and context.

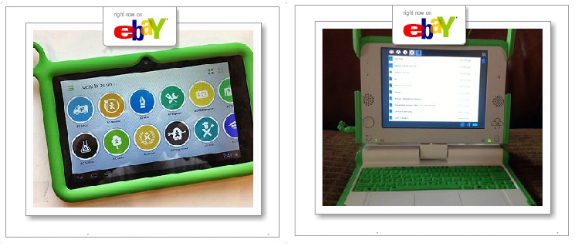

Recommendations for OLPC from the evaluations so far most often concern technical improvements: the battery life of the computers, hardware concerns, and support for software development. In many remote regions where OLPC operates, limited access to electricity and Internet connectivity can directly prevent children from realizing the full learning potential of the XO laptop. OLPC has introduced a solar panel and a hand crank to counteract energy concerns, and it continues to address technical issues as they are reported back from deployments.

Beyond the technical, other suggestions largely focus on improving capacity- and knowledge-building programs for teachers and children alike. There have been strong calls for more training, greater coordination of XO distributions, and comprehensive plans for integrating XOs into classroom use. Families and community members have also voiced a desire for more training so they can assist children at home and in school.

Fortunately, however, informal learning outside the classroom has been flourishing. Community feedback and the demand for more training programs are strong indicators that the laptops are contributing to active community engagement and to broader societal interest in the future of childhood education.

Monitoring and evaluation of the wide array of OLPC programs is an exciting learning opportunity for OLPC. We look forward to continued work with our partners to understand how technology creates educational change.

The above is the abstract from "Review of External OLPC Monitoring & Evaluation Reports" by the OLPC Learning Group.

That is one of the least convincing reports I have read in a long time, perhaps ever. I don't have time to go through the whole thing, so I'll pick out one section: "Attendance and Access". School attendance is one of the easiest things to measure since records of it are frequently kept (not always, but often). For example, the first program mentioned in that section is in Birmingham City Schools, Alabama. As a public school district, Birmingham keeps definitive records of its attendance.

Why is it, then, that neither for Birmingham nor for any other program mentioned are any quantitative data listed to support the claim that attendance has increased? And if such data are not available, shouldn't that give the authors of this report pause before concluding that attendance has indeed risen?

The range of studies included is also not very impressive. For example, a very brief second-hand press report on the Birmingham program is included, but two of the three national reports on Plan Ceibal in Uruguay are left out, as is the study by the Inter-American Development Bank of the program in Peru.

It's good, I suppose, that OLPC is trying to follow up on monitoring and evaluation at all, but efforts like this are not very helpful (unless the goal is simply to put a pretty face on the program).

You're a bad person, Warschauer. You hate kids, Warschauer.

Shame on you, Warschauer!

It's immediately apparent that the "report" is pure BS when they use the word "longitudinal" just to make it sound more scholarly.

What's the difference between

"One Laptop Per Child awaits in-depth longitudinal assessments of the program from our partners"

and

"One Laptop Per Child awaits in-depth assessments of the program from our partners."?

BS, specifically designed to fool the few poor souls still drinking the "$200 Koolaid Per Dummy" poison.

the word does change the meaning:

http://en.wikipedia.org/wiki/Longitudinal_study

greetings, eMBee.

Of course, the word does change the meaning, but the word does not apply to OLPC - that was the point. "Longitudinal", as explained in the Wikipedia reference, means over "a very long time". And that does not apply to OLPC's two-minute studies dealing mostly with anecdotal data ("parents love the laptop") or outright lies (like the much-touted "30% attendance increase in several places").

This review of existing external literature on OLPC projects was written with the intention of summarizing the growing body of work that has been undertaken by interested partner organizations. Personal interest and the desire to learn about existing projects on the ground inspired this research, and the review was subsequently made public upon request from many interested parties.

Initially written and compiled in June 2010, this review pulls information from publicly accessible reports on OLPC projects globally. It is not in any way meant to be a final product, but rather an ongoing research endeavor that will include more reports as they are written. I very much hope that it inspires the sharing and dissemination of more evaluations and reports on OLPC projects, as well as on other projects involving children and technology in the developing world.

OLPC does not conduct formal evaluations of XO deployments. Objectivity would, and should, be called into question if a non-profit organization carried out evaluations of its own projects, since it has a clear vested interest in their success. For this and many other reasons, monitoring and evaluation by local actors and partner organizations is strongly encouraged, so that they can evaluate the project's success against their own community needs and objectives. This review attempts to highlight these approaches to monitoring and evaluation in order to make those efforts more transparent and to inspire further debate on M&E and its importance to international development work.

More scientific and empirical approaches to evaluation would be of tremendous benefit to all of us invested in this mission for childhood education. Because children have not been followed long enough, it is difficult to say what impact, if any, using laptops for education could have on dropout rates, job skills and hiring prospects, literacy acquisition, economic development, and so on. A longitudinal study (one that follows students with XOs for a long time!), which may very well come from Uruguay first, is eagerly awaited. I strongly encourage anyone who has access to additional literature that I may have missed in this review to pass it along!

Zehra, thanks a lot for the information and explaining the context of the review! :-)

Thanks for the explanations. I will try to add some further comments when I have time. In the meantime, here are some important studies that may not have been in your report (perhaps due to the date of publication or perhaps due to the fact they are in Spanish):

Plan Ceibal. (2010). Síntesis del informe de monitoreo del estado del parque de XO a abril de 2010 [Summary of the monitoring report on the status of the XO fleet as of April 2010]. Retrieved September 14, 2010 from http://www.ceibal.org.uy/docs/Plan_Ceibal____Informe_Estado_XO__Abril_2010.pdf

Salamano, I., Pagés, P., Baraibar, A., Ferro, H., Pérez, L., & Pérez, M. (2009). Monitoreo y evaluación educativa del Plan Ceibal: Primeros resultados a nivel nacional [Educational monitoring and evaluation of Plan Ceibal: First national results]. Retrieved June 21, 2010 from http://www.ceibal.org.uy/docs/evaluacion_educativa_plan_ceibal_resumen.pdf

Santiago, A., Severin, E., Cristia, J., Ibarrarán, P., Thompson, J., & Cueto, S. (2010). Evaluación experimental del programa "Una Laptop por Niño" en Perú [Experimental evaluation of the "One Laptop per Child" program in Peru]. Washington, DC: Banco Interamericano de Desarrollo.

Also, Morgan Ames and I have a major piece on OLPC coming out in the fall/winter 2010 issue of the Journal of International Affairs. It should be available around December 6 at http://jia.sipa.columbia.edu/. You may find it of interest.

Hello Mark,

I read the abstract of your article in the Journal of International Affairs.

Do we have to purchase the volume to read the complete article?

I would very much like to read it.

Thanks

Unfortunately it's hard to even figure out from their site how to purchase it. Email me at markw@uci.edu if you'd like a copy.

Hi Zehra

I'm unsettled by the idea that NGOs shouldn't monitor the impact of their projects because they have vested interests. That would mean NGOs are freed from accountability to the providers of their funding, whether governments, institutions, or individuals. I suspect Oxfam has a very different take on this issue (and different methods: http://www.oxfam.org/en/about/accountability).

The PDF review mentions that it uses no single method to collect data. This concerns me, as it leaves these assessments of the OLPC project open to observer bias and to 'cherry-picking' data that will make OLPC appear effective whether it is or not.

Is there a reason why OLPC as an organisation has decided to forego self-monitoring?

HT

Hi HT,

I 100% agree with you. Accountability is absolutely crucial, and I believe that Oxfam takes a responsible and reasonable approach. I also agree with your point about the methods and cherry-picking positive anecdotes, but I think that, for the purpose of this paper, such anecdotes provide interesting and unique insights into the projects; the paper in no way purports to be a scientific analysis.

I think the difficulty in this context with OLPC is that the projects are not our own. We don't choose the schools, the community, the methods, or the objectives; we facilitate access to the XOs and introduce them as learning tools. I cannot speak for all projects around the world, as I did not participate in them, but in the West Bank my primary role was to support the integration of the XO program into the UNRWA schools and to develop partnerships, while all of the program specifics were determined by the partner, in this case UNRWA. I would love to see an evaluation of the impact of XOs in UNRWA schools, but unfortunately I am not allowed to carry one out myself. Nor could I use that data as an example to make a blanket judgement about the successes or failures of any XO laptop project or of OLPC in general; the project is different in every community.

I think project evaluation in general is absolutely crucial, but it is a separate task from non-profit accountability. Determining whether XOs succeed in advancing children's education internationally and ensuring a non-profit's social accountability to its funders call for different forms and methods of evaluation. The former is my current interest and the focus of this review.