Cynthia, the daughter of my friend Sue, has cerebral palsy and uses a small touchscreen with picture icons to speak. Sue explained that this is a costly piece of equipment: it costs $6,000, plus a $400 fee whenever it needs service. These are expenses that many middle-class families with special-needs children cannot afford, even in the developed world.

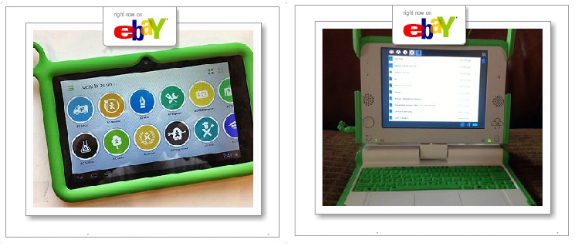

I had just received my OLPC through the Give One Get One program. I was curious and excited about this new platform, but had little idea of its possibilities. "Hi Matt, what's that thing?" asked Sue. The green laptop had caught her eye. After I explained, her first question was, "Could this help my daughter?"

Based on Cynthia's use of other computers, Sue expected that she could probably use the OLPC's touchpad, and possibly the keyboard. After a quick web search, I used the built-in Espeak utility from the command line to make the OLPC say, "Hello, this is a test of speech synthesis."
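The same command-line call can be wrapped from Python. What follows is a minimal sketch, not the project's code. It assumes an `espeak` binary is on the PATH and uses only its `-s` option (speaking rate in words per minute, default 175). The helper names `build_espeak_command` and `speak` are hypothetical.

```python
import shutil
import subprocess


def build_espeak_command(text, words_per_minute=175):
    """Build the argument list for the espeak CLI (hypothetical helper)."""
    # -s sets the speaking rate in words per minute; the text to speak is the last argument.
    return ["espeak", "-s", str(words_per_minute), text]


def speak(text, words_per_minute=175):
    """Speak text aloud by running espeak as a short-lived child process."""
    # Assumption: espeak is installed; fail clearly if it is not.
    if shutil.which("espeak") is None:
        raise RuntimeError("espeak is not installed or not on PATH")
    # check=True raises CalledProcessError if espeak exits with an error status.
    subprocess.run(build_espeak_command(text, words_per_minute), check=True)
```

Calling `speak("Hello, this is a test of speech synthesis.")` reproduces the test sentence. Passing the arguments as a list rather than a shell string avoids quoting problems with user-selected text.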

The missing piece was a software interface that would let Cynthia select concepts to speak from a menu of icons. Free Icon-To-Speech is that missing piece: an open-source software Activity designed to turn the OLPC into an Augmentative and Alternative Communication (AAC) device with more functionality and ruggedness than devices that cost over ten times as much.

Development of Free Icon-To-Speech started at PyCon 2008 when I posted a card on the huge bulletin board for after-hours open space meetings that read "OLPC icon-to-speech for people with Stephen Hawking-like needs." There were no other topics like it on the board, so I was unsure if it would get any interest. Throughout the next day, I nervously wondered if anyone would show up.

Four people did, and two stayed afterward for dinner. Tony Anderson had already enabled the classic Eliza Turing Test software for speech synthesis on the OLPC. Lisa Beal had previously worked on software for people with disabilities. We were the longest-running table at the restaurant that night, and our conversation covered everything from zoomable user interfaces to the most important concepts used in infant sign language. Two big questions remained:

- "Could people with Cynthia's level of limited motor skills use the OLPC touchpad and buttons?"

- "Where would we get quality icons we could freely distribute?"

Mel Chua, another OLPC volunteer, read the description and said, "It looks good - put it up! It's good to see someone working on OLPC accessibility." (FreeIconToSpeech on the OLPC Wiki)

I was amazed at how much momentum the project had, given that it had only just been conceived.

The following week, my partner Annie and I met with Cynthia and her parents, Sue and Jay. I entered some text into the Speak activity on the OLPC as an example, and while I was explaining it to Sue and Jay, Cynthia typed in her Special Olympics cheer and then played it. This interrupted our conversation, and we all cheered.

While her parents and I continued to talk, Cynthia launched a music application and started to play music. She did this more quickly than I had a few days before. We all cheered again. We now knew that Cynthia could use the OLPC without a touchscreen. Before I packed up to leave, I checked my email and found that Lisa had sent example functions for our planned GUI (graphical user interface); we were all really excited.

Within a few days, Tony posted a first prototype on the OLPC wiki. Encouraged by the progress, Jay and Sue bought Cynthia an OLPC for her birthday. Cynthia called me regularly in the evenings to say hi, so I kept her up to date as I merged Tony's third prototype with Lisa's example functions, which allowed zooming of icons in common graphics formats. Then I added a basic ontology of 150 concepts and icons.

This was enough to be somewhat useful for daily living, so I scheduled a test with Cynthia. The day before the test, I discovered that although the new version ran fine on my desktop computer, it would not speak on the OLPC. We went ahead with the test anyway, hauling along my desktop computer and parts. The tests went quite well, covering basic needs, food, and daily living. Even with only 150 concepts, you can assemble some pretty funny statements, like "play ghetto blaster baby baby baby." Before you ask, yes, "ghetto blaster" is the actual, original, unaltered name from the icon set - included in the test just for fun.

The OLPC's failure to speak turned out to be a memory problem: the underlying Espeak utility appeared to need a significant amount of memory, but only briefly, during synthesis. I refactored Free Icon-To-Speech to compensate and installed a working alpha version on Cynthia's OLPC; she is now using it.

Meanwhile, purely through word of mouth, many people began expressing interest in the project for needs ranging from stroke recovery to language learning and cross-cultural communication. The non-profit organization Voices for Peru invited us to set up Peruvian children with OLPCs and Free Icon-To-Speech in the coming months. Shortly after that request, I finished a complete rewrite of the code to improve its memory efficiency and readability and to make better use of design patterns.

Free Icon-To-Speech is now usable, but still requires a lot of help to make it into a polished package ready for deployment. We need the skills of:

- Organizations, groups, and people who can help us find, create, and/or donate openly licensed icons.

- Device driver specialists to help us create a procedure for touch panel (and other pointing device) driver installation.

- Programmers to help us implement more features within the open-source software stack.

In the past, I've often wondered how to find projects that were meaningful. Coincidentally, this one found me. Designing software and hardware for the disabled is a way for the open source community to make a profound impact. Please join us in contributing to these technologies so that all people, regardless of disability, will be able to use their computers as tools to explore, create, learn, and communicate.

Matt Barkau is the principal driving force behind the Free Icon-To-Speech project.

"While her parents and I continued to talk, Cynthia launched a music application and started to play music."

I cried when I read this.

Well done Matt.

Yes, this is an important class of applications. It comes up periodically on open source in education mailing lists, but I've never been able to get anyone to explain exactly what the requirements are, so it has lingered as an important kind of educational app that developers don't understand. So... kudos! This is important, and I'm glad you've found some traction with it.

Public domain icons:

http://www.openclipart.org/

It would be interesting to combine the icon-to-speech app with Dasher[1] (an input program for mobility-impaired users) to yield a program that lets the user progressively refine icon concepts. The top level could be Maslow's hierarchy of needs[2], with further levels so one could drill into things categorically (e.g. "Physiological -> Food -> Eat -> Favorites -> Pizza -> Mushroom" or "Love/belonging -> Hug").

[1] http://www.inference.phy.cam.ac.uk/dasher/

[2] http://en.wikipedia.org/wiki/Maslow%27s_hierarchy_of_needs
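The drill-down suggested above can be modeled as a nested dictionary: each category maps labels to subcategories, and each leaf holds a phrase to speak. This is a hypothetical sketch, not how Free Icon-To-Speech stores its ontology. The tree name `CONCEPTS`, the function `drill`, and the leaf phrases are illustrative placeholders, and only two of Maslow's top-level categories are filled in.

```python
# Hypothetical concept tree: inner dicts are categories, string leaves are phrases to speak.
CONCEPTS = {
    "Physiological": {
        "Food": {
            "Eat": {
                "Favorites": {
                    "Pizza": {
                        "Mushroom": "I want mushroom pizza.",
                    },
                },
            },
        },
    },
    "Love/belonging": {
        "Hug": "Can I have a hug?",
    },
}


def drill(tree, path):
    """Follow a sequence of icon selections down the tree.

    Returns the sorted child labels (the next menu to show) if the path ends
    at a category, or the phrase string if it ends at a leaf.
    Raises KeyError if a selection does not exist at that level.
    """
    node = tree
    for label in path:
        if isinstance(node, str):
            # A leaf phrase has no children to select from.
            raise KeyError(f"{label!r}: already at a leaf phrase")
        node = node[label]
    if isinstance(node, str):
        return node
    return sorted(node)
```

An empty path returns the top-level menu, `["Love/belonging", "Physiological"]`. Each selection narrows the menu until a leaf phrase is reached, and that phrase would then be handed to the speech synthesizer.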

Thanks Gumnos; we've planned both of those ideas into the project - feel free to comment further on the needs categories (also rationalized with those of Neef) on the OLPC Wiki page at http://wiki.laptop.org/go/FreeIconToSpeech

I'm Cynthia's mom. This is so cool to see the responses and to know there is such a need.

Tonight we went to a Thanksgiving Eve service and Cynthia brought her OLPC. She is really excited about what is going on and is very motivated when she is aware of others who are excited about her using this device.

Thank you to everyone!!!

Years ago I helped a friend with a card for the Apple II that facilitated his communication as ALS took away his normal faculties. He controlled a letter-word-concept selector by blinking his eyelid. Yesterday I learned that another friend has just been diagnosed with ALS. I hope your project addresses the needs of adults like them as well as those of kids.

Nice job so far.

This is an exciting project. A friend's daughter was just set up with a small computer to aid communication, but it is very cumbersome and, in my opinion, challenging to learn; her parents can't help her with it.

One of the things I noticed about the device is that, though the girl is now 18 years old, it is geared to children under 10. I agree that it would be great to include adult needs in the project, along with voice synthesis.

Additionally, the computer was ridiculously expensive. Being able to modify a laptop that the entire family can use at different times, or to have this technology available in an inexpensive laptop such as this, is exciting enough to make me cry! I think about the kids out there whose families don't have the kind of insurance my friend has and wonder how those kids ever build a network beyond their families!

Without a relatively easy way to communicate, there's little opportunity to grow and learn, not only about themselves, but about other people who touch their lives. Good job! I look forward to seeing how this project progresses!